Research

My recent work explores generative 3D world models, specifically diffusion-based occupancy prediction for exploration, mapping, and long-horizon planning in mobile robots. I develop algorithms and AI models that enable mobile robots to perceive, predict, and act effectively in complex, partially observed environments from an egocentric viewpoint. My research focuses on three areas: multi-modal machine perception for 3D scene understanding, generative occupancy modeling, and Vision-Language-Action (VLA) models that unify perception, language, and control for grounded decision-making.

Publications

RF-Modulated Adaptive Communication Improves Multi-Agent Robotic Exploration

- Under review - 2026

- Read our pre-print manuscript here.

Robust Robotic Exploration and Mapping Using Generative Occupancy Map Synthesis

- Accepted at Autonomous Robots (Springer Journal) - January 2026

- View the paper in Springer's Autonomous Robots journal.

- Check out the pre-print manuscript here.

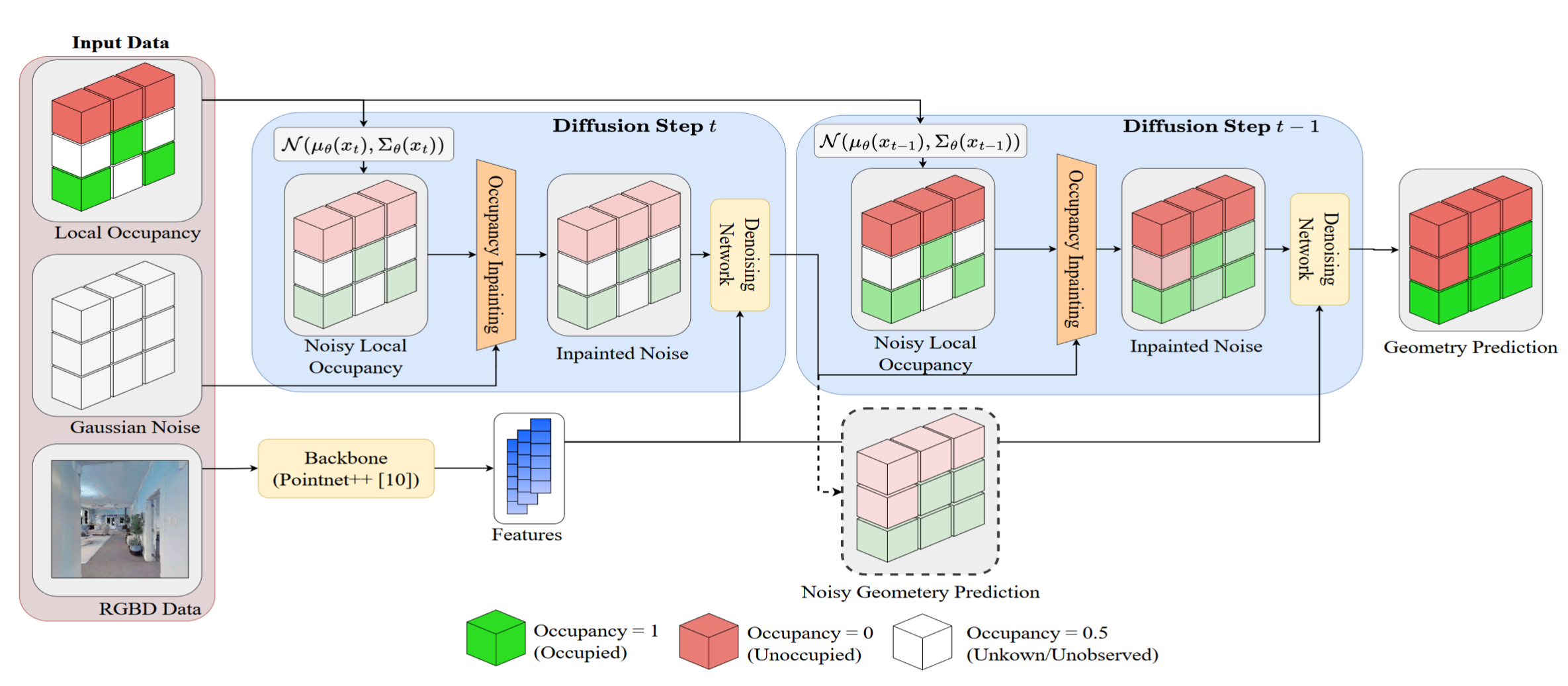

Online Diffusion-Based 3D Occupancy Prediction at the Frontier with Probabilistic Map Reconciliation

- Accepted to ICRA 2025

- Check out the pre-print manuscript here.

- Read the published version on IEEE Xplore

- Paper Webpage

SceneSense: Diffusion Models for 3D Occupancy Synthesis from Partial Observation

- Accepted to IROS 2024

- Read our pre-print manuscript here.

- Read the published version on IEEE Xplore

- Paper Webpage

Projects and Presentations

Advancing Robotics with Vision-Language-Action Models

Sim-to-Sim Transfer Framework

- Developed a simulation-to-simulation transfer pipeline to validate control policies across different physics engines, comparing Isaac Sim with MuJoCo.

- Read the project write-up here

Vision Language Models: PaliGemma from Scratch

- Recreated the PaliGemma Vision-Language Model (VLM) architecture entirely from scratch using PyTorch.

- Implemented the complete model architecture to deepen my understanding of multimodal integration and large-scale model design.

- View the GitHub repo here